Caching

Introduction

RESTHeart speeds up the execution of GET requests on collections, i.e. GET /coll, via caching.

Caching applies when several documents need to be read from a collection, and it can moderate the effect of the MongoDB cursor.skip() method, which slows down linearly as the number of skipped documents grows.

RESTHeart allows reading documents via GET requests on collections.

GET /test/coll?count&page=3&pagesize=10 HTTP/1.1

HTTP/1.1 200 OK

[

{ <DOC30> }, { <DOC31> }, ... { <DOC39> }

]

Documents are returned as paginated result sets, i.e. each request returns a limited number of documents.

Pagination is controlled via the following query parameters:

- page: the range of documents to return
- pagesize: the number of documents to return (default value is 100, maximum is 1000).

Behind the scenes, this is implemented via the MongoDB cursor.skip() method.
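The mapping from the pagination parameters to a skip/limit pair can be sketched as follows. This is only an illustration: the function name `page_to_skip_limit` is hypothetical, and the assumption that pages are numbered from 1 is not stated in this page (pass `first_page=0` to model zero-based numbering). The default and maximum page sizes are the ones listed above.

```python
DEFAULT_PAGESIZE = 100  # default value stated in the text
MAX_PAGESIZE = 1000     # maximum value stated in the text


def page_to_skip_limit(page, pagesize=DEFAULT_PAGESIZE, first_page=1):
    """Translate page/pagesize into a (skip, limit) pair for a cursor.

    Assumption: pages are numbered starting at `first_page` (1 by default).
    """
    if page < first_page:
        raise ValueError(f"page must be >= {first_page}")
    if pagesize < 0 or pagesize > MAX_PAGESIZE:
        raise ValueError(f"pagesize must be between 0 and {MAX_PAGESIZE}")
    # The number of documents to skip grows linearly with the page number.
    skip = (page - first_page) * pagesize
    return skip, pagesize


if __name__ == "__main__":
    print(page_to_skip_limit(3, 10))
    print(page_to_skip_limit(5000, 100))
```

Under these assumptions, the skip value grows in proportion to the page number, which is why requests for deep pages become expensive.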

The issue is that MongoDB queries with a large "skip" slow down linearly. As the MongoDB manual says:

The cursor.skip() method is often expensive because it requires the server to walk from the beginning of the collection or index to get the offset or skip position before beginning to return result. As offset (e.g. pageNumber above) increases, cursor.skip() will become slower and more CPU intensive. With larger collections, cursor.skip() may become IO bound.

That is why the MongoDB documentation section about skips suggests:

Range queries can use indexes to avoid scanning unwanted documents, typically yielding better performance as the offset grows compared to using skip() for pagination.
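The difference between the two pagination styles can be illustrated with a small simulation. This is not MongoDB code: a sorted Python list stands in for an index on a key, `bisect` stands in for an index seek, and the function names are hypothetical.

```python
import bisect


def page_with_skip(sorted_ids, skip, limit):
    """Skip-based paging: walk past `skip` entries before returning any.

    Returns the page and the number of entries visited.
    """
    if limit <= 0:
        return [], 0
    visited = 0
    page = []
    for position, doc_id in enumerate(sorted_ids):
        visited += 1
        if position < skip:
            continue  # entry is walked over but not returned
        page.append(doc_id)
        if len(page) == limit:
            break
    return page, visited


def page_with_range(sorted_ids, last_seen_id, limit):
    """Range-based paging: seek directly to the first id > `last_seen_id`."""
    if last_seen_id is None:
        start = 0
    else:
        start = bisect.bisect_right(sorted_ids, last_seen_id)
    return sorted_ids[start:start + limit]


if __name__ == "__main__":
    ids = list(range(1000))
    page, visited = page_with_skip(ids, skip=30, limit=10)
    print(page, visited)
    print(page_with_range(ids, last_seen_id=29, limit=10))
```

Both calls return the same ten ids, but the skip-based version visits every entry before the page, while the range-based version locates the start position with a binary search.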

How it works

If the request contains the query parameter ?cache, then the query cursor used to retrieve the pagesize documents is cached. This allows caching up to batchSize documents.

A subsequent request, on the same collection, and with the very same filter, sort, hint, etc. parameters can reuse the cached cursor, avoiding the need to query MongoDB, and speeding up the request.
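The idea can be sketched with a toy cache keyed by the query parameters. This is not RESTHeart's implementation: the class name, the `run_query` callback, the eviction policy, and the choice to bypass the cache for pages beyond the cached documents are all assumptions made for illustration. Only the three limits (number of entries, time to live, documents per entry) correspond to settings described on this page.

```python
import time


class CursorCacheSketch:
    """Toy model of a per-query cache; an illustration, not RESTHeart code."""

    def __init__(self, max_entries=100, ttl_ms=10_000, docs_per_entry=1000,
                 clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl_s = ttl_ms / 1000
        self.docs_per_entry = docs_per_entry
        self.clock = clock
        self.entries = {}  # key -> (created_at, cached documents)

    def get_page(self, run_query, collection, filter_, sort, skip, limit):
        """Return documents [skip, skip + limit), using the cache if possible.

        `run_query(collection, filter_, sort, n)` is a hypothetical callback
        that returns the first `n` documents matching the query.
        """
        if skip + limit > self.docs_per_entry:
            # Assumption: pages beyond the cached range go to the database.
            return run_query(collection, filter_, sort, skip + limit)[skip:]
        key = (collection, filter_, sort)
        now = self.clock()
        entry = self.entries.get(key)
        if entry is not None and now - entry[0] >= self.ttl_s:
            del self.entries[key]  # expired
            entry = None
        if entry is None:
            docs = run_query(collection, filter_, sort, self.docs_per_entry)
            if len(self.entries) >= self.max_entries:
                # Assumption: evict the oldest entry when the cache is full.
                oldest = min(self.entries, key=lambda k: self.entries[k][0])
                del self.entries[oldest]
            entry = (now, docs)
            self.entries[key] = entry
        return entry[1][skip:skip + limit]
```

In this sketch, two requests with the same collection, filter and sort share one entry, so the second page is served without calling `run_query` again. Any change made to the collection after the entry was created stays invisible until the entry expires.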

Note: as with any caching system, the performance gain comes at a price. The cached document pages can be obsolete, since cached documents could have been modified or deleted and new documents could have been created.

Configuration

The following configuration options apply to caching:

mongo:
  # get collection cache speeds up GET /coll?cache requests
  get-collection-cache-size: 100
  get-collection-cache-ttl: 10_000 # Time To Live, default 10 seconds
  get-collection-cache-docs: 1000 # number of documents to cache for each request

| parameter | description | default value |
|---|---|---|
| get-collection-cache-size | the maximum number of cached cursors | 100 |
| get-collection-cache-ttl | the time in milliseconds that a cursor is cached before it’s deleted | 10000 (10 seconds) |
| get-collection-cache-docs | the number of documents to cache per each query (must be >= …) | 1000 |

Results

Issue 442 on GitHub reports the following performance results on a collection with more than 6 million documents.

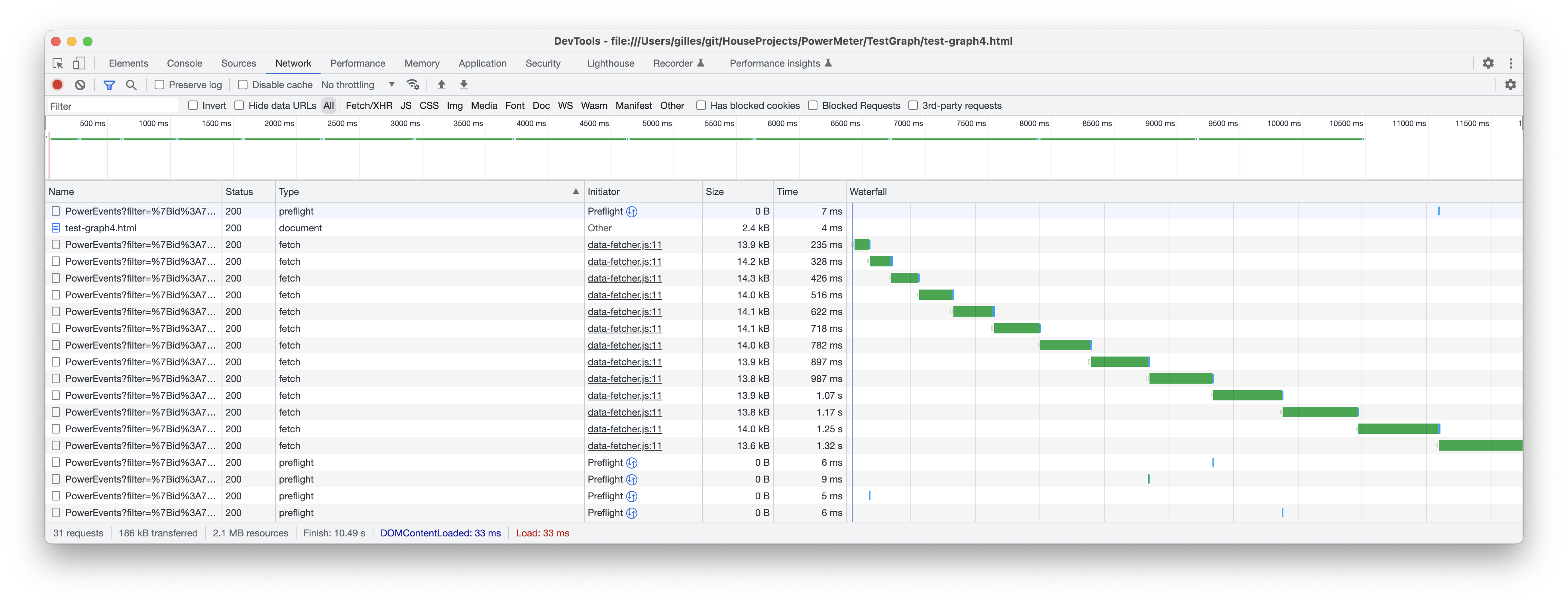

Without caching (total time = ~12 seconds):

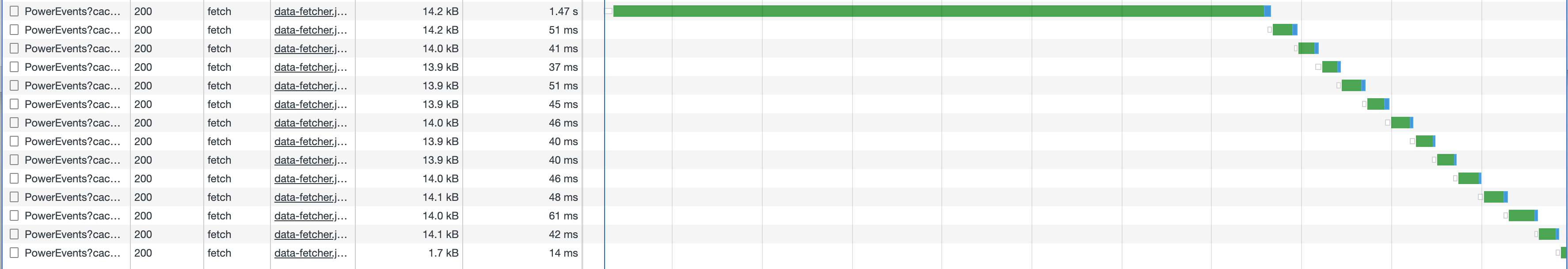

With caching (total time = ~2 seconds):

Cache consistency with transactions

To make sure that requests using caching return consistent data, transactions can be used, since the isolation property of transactions guarantees consistency.

create a session:

POST /_sessions HTTP/1.1

...

Location: http://localhost:8009/_sessions/11c3ceb6-7b97-4f34-ba3f-689ea22ce6e0

start a transaction:

POST /_sessions/11c3ceb6-7b97-4f34-ba3f-689ea22ce6e0/_txns HTTP/1.1

HTTP/1.1 201 Created

Location: http://localhost:8009/_sessions/11c3ceb6-7b97-4f34-ba3f-689ea22ce6e0/_txns/1

get data in the transaction with caching:

GET /coll?sid=11c3ceb6-7b97-4f34-ba3f-689ea22ce6e0&txn=1&cache&page=3&pagesize=10 HTTP/1.1

HTTP/1.1 200 OK

[

{ <DOC30> }, { <DOC31> }, ... { <DOC39> }

]

GET /coll?sid=11c3ceb6-7b97-4f34-ba3f-689ea22ce6e0&txn=1&cache&page=4&pagesize=10 HTTP/1.1

HTTP/1.1 200 OK

[

{ <DOC40> }, { <DOC41> }, ... { <DOC49> }

]

abort the transaction (since no data has been modified):

DELETE /_sessions/11c3ceb6-7b97-4f34-ba3f-689ea22ce6e0/_txns/1 HTTP/1.1

HTTP/1.1 204 No Content
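A client following the steps above has to carry the session and transaction identifiers from the Location headers into the later requests. The helpers below sketch that bookkeeping without performing any network I/O. The function names are hypothetical, and the URL shapes are taken from the example requests above.

```python
from urllib.parse import urlsplit


def ids_from_location(location):
    """Extract (session id, transaction id or None) from a Location value.

    Expected path shapes, as in the examples above:
      /_sessions/<sid>  or  /_sessions/<sid>/_txns/<txn>
    """
    parts = urlsplit(location).path.strip("/").split("/")
    if len(parts) < 2 or parts[0] != "_sessions":
        raise ValueError(f"unexpected Location: {location}")
    sid = parts[1]
    txn = parts[3] if len(parts) >= 4 and parts[2] == "_txns" else None
    return sid, txn


def cached_page_request(collection, sid, txn, page, pagesize):
    """Build the request line for a cached page read inside a transaction."""
    return (f"GET /{collection}?sid={sid}&txn={txn}&cache"
            f"&page={page}&pagesize={pagesize} HTTP/1.1")


def abort_request(sid, txn):
    """Build the request line that aborts the transaction."""
    return f"DELETE /_sessions/{sid}/_txns/{txn} HTTP/1.1"


if __name__ == "__main__":
    location = ("http://localhost:8009/_sessions/"
                "11c3ceb6-7b97-4f34-ba3f-689ea22ce6e0/_txns/1")
    sid, txn = ids_from_location(location)
    for page in (3, 4):
        print(cached_page_request("coll", sid, txn, page, 10))
    print(abort_request(sid, txn))
```

Run as a script, this prints the same two GET request lines and the DELETE request line shown in the walkthrough.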